DICOM (Digital Imaging and Communications in Medicine) is a standard for handling, storing, printing, and transmitting information in medical imaging. It includes a file format definition and a network communications protocol. The communication protocol is an application protocol that uses TCP/IP to communicate between systems. DICOM files can be exchanged between two entities that are capable of receiving image and patient data in DICOM format. The National Electrical Manufacturers Association (NEMA) holds the copyright to this standard.[1] It was developed by the DICOM Standards Committee, some of whose members[2] are also members of NEMA.[3]

DICOM enables the integration of scanners, servers, workstations, printers, and network hardware from multiple manufacturers into a picture archiving and communication system (PACS). The different devices come with DICOM conformance statements which clearly state which DICOM classes they support. DICOM has been widely adopted by hospitals and is making inroads in smaller applications like dentists' and doctors' offices.


In addition to a Value Representation, each attribute also has a Value Multiplicity (VM) that indicates the number of values contained in the attribute. For character string value representations, if more than one value is encoded, successive values are separated by the backslash character "\".
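As an illustration, the following Python sketch splits the raw bytes of a character-string attribute into its individual values. It assumes the default character repertoire (plain ASCII, with no handling of Specific Character Set) and that the caller already knows the VR; `split_values` is a hypothetical helper name. It treats LT, ST, and UT as single-valued, because for those text VRs the backslash is an ordinary character rather than a delimiter.

```python
from typing import List

# Text VRs always have VM 1; a backslash inside them is not a delimiter
SINGLE_VALUED_TEXT_VRS = {"LT", "ST", "UT"}


def split_values(raw: bytes, vr: str) -> List[str]:
    """Split a character-string element value into its individual values.

    Hypothetical helper: assumes the default (ASCII) character repertoire
    and ignores Specific Character Set (0008,0005).
    """
    text = raw.decode("ascii")
    if not text:
        return []  # zero-length value: no values at all
    if vr in SINGLE_VALUED_TEXT_VRS:
        # Trailing padding spaces are not significant for text VRs
        return [text.rstrip(" ")]
    # Values are delimited by backslash; strip space padding and the
    # NUL byte used to pad UI values to an even length
    return [value.strip(" \x00") for value in text.split("\\")]


# Pixel Spacing (0028,0030) is a DS attribute with VM 2
print(split_values(b"0.5\\0.5 ", "DS"))  # ['0.5', '0.5']
```

The trailing space in the example reflects the rule that element values are padded to an even length.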


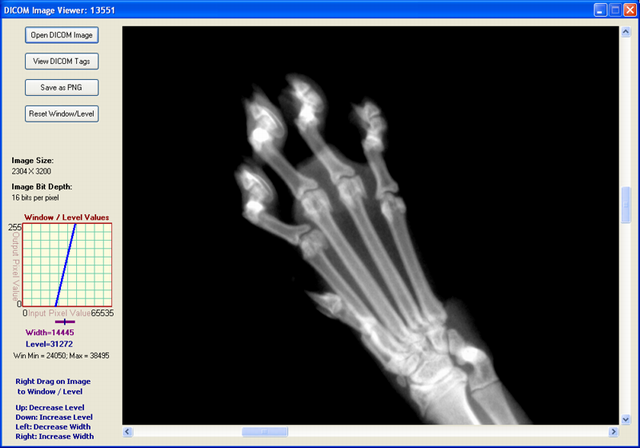

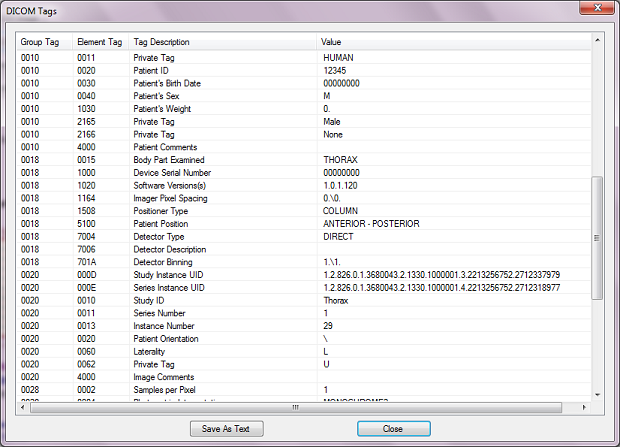


History

DICOM is the third version of a standard developed by the American College of Radiology (ACR) and the National Electrical Manufacturers Association (NEMA). In the early 1980s, it was very difficult for anyone other than the manufacturers of computed tomography or magnetic resonance imaging devices to decode the images that the machines generated. Radiologists and medical physicists wanted to use the images for dose planning in radiation therapy. ACR and NEMA joined forces and formed a standards committee in 1983. Their first standard, ACR/NEMA 300, was released in 1985. Very soon after its release, it became clear that improvements were needed: the text was vague and had internal contradictions.

In 1988 the second version was released. This version gained more acceptance among vendors. Image transmission was specified over a dedicated cable of 25 differential (EIA-485) pairs. The first demonstration of ACR/NEMA V2.0 interconnectivity technology was held at Georgetown University on May 21–23, 1990. Six companies participated in this event: DeJarnette Research Systems, General Electric Medical Systems, Merge Technologies, Siemens Medical Systems, Vortech (acquired by Kodak that same year), and 3M. The same vendors presented commercial equipment supporting ACR/NEMA 2.0 at the 1990 annual meeting of the Radiological Society of North America (RSNA). Many soon realized that the second version also needed improvement. Several extensions to ACR/NEMA 2.0 were created, such as Papyrus (developed by the University Hospital of Geneva, Switzerland) and SPI (Standard Product Interconnect), driven by Siemens Medical Systems and Philips Medical Systems.

The first large-scale deployment of ACR/NEMA technology was made in 1992 by the US Army and Air Force, as part of the MDIS (Medical Diagnostic Imaging Support) program run out of Fort Detrick, Maryland. Loral Aerospace and Siemens Medical Systems led a consortium of companies in deploying the first US military PACS (Picture Archiving and Communications System) at all major Army and Air Force medical treatment facilities, along with teleradiology nodes at a large number of US military clinics. DeJarnette Research Systems and Merge Technologies provided the modality gateway interfaces from third-party imaging modalities to the Siemens SPI network. The Veterans Administration and the Navy also purchased systems under this contract.

In 1993 the third version of the standard was released. Its name was then changed to "DICOM" to improve its chances of international acceptance as a standard. New service classes were defined, network support was added, and the Conformance Statement was introduced. Officially, the latest version of the standard is still 3.0, but it has been continually updated and extended since 1993. Rather than by version number, editions of the standard are usually identified by release year, as in "the 2007 version of DICOM".

While the DICOM standard has achieved near-universal acceptance among medical imaging equipment vendors and healthcare IT organizations, it has its limitations. DICOM addresses technical interoperability in medical imaging; it is not a framework or architecture for achieving a useful clinical workflow. RSNA's Integrating the Healthcare Enterprise (IHE) initiative, layered on top of DICOM and HL7, provides this final piece of the medical imaging interoperability puzzle.

Derivations

There are some derivations from the DICOM standard into other application areas. These include:

- DICONDE (Digital Imaging and Communication in Nondestructive Evaluation), established in 2004 as a way for nondestructive testing manufacturers and users to share image data.[5]
- DICOS (Digital Imaging and Communication in Security), established in 2009 for image sharing in airport security.[6]

DICOM data format

DICOM differs from some, but not all, data formats in that it groups information into data sets. That means that a file of a chest X-ray image, for example, actually contains the patient ID within the file, so that the image can never be separated from this information by mistake. This is similar to the way that image formats such as JPEG can also have embedded tags to identify and otherwise describe the image.

A DICOM data object consists of a number of attributes, including items such as name and ID, and also one special attribute containing the image pixel data (that is, logically, the main object has no "header" as such: merely a list of attributes, including the pixel data). A single DICOM object can have only one attribute containing pixel data. For many modalities, this corresponds to a single image. Note, however, that the attribute may contain multiple "frames", allowing storage of cine loops or other multi-frame data. Another example is NM data, where an NM image is by definition a multi-dimensional multi-frame image. In these cases, three- or four-dimensional data can be encapsulated in a single DICOM object. Pixel data can be compressed using a variety of standards, including JPEG, JPEG Lossless, JPEG 2000, and run-length encoding (RLE). Deflate (zip) compression can be used for the whole data set (not just the pixel data), but this has rarely been implemented.
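Whole-data-set compression corresponds to the Deflated Explicit VR Little Endian transfer syntax (UID 1.2.840.10008.1.2.1.99), which compresses the encoded data set as a raw Deflate stream (RFC 1951, the algorithm used in zip archives) with no zlib header; the File Meta Information of a file stays uncompressed. The following is a minimal sketch with Python's standard `zlib` module. The helper names are hypothetical and the input is a stand-in byte string, not a complete data set.

```python
import zlib

# Transfer Syntax UID for Deflated Explicit VR Little Endian
DEFLATED_EXPLICIT_VR_LE = "1.2.840.10008.1.2.1.99"


def deflate_dataset(encoded: bytes) -> bytes:
    """Compress an already-encoded data set as a raw Deflate stream."""
    # Negative wbits selects raw Deflate (RFC 1951): no zlib header or checksum
    compressor = zlib.compressobj(9, zlib.DEFLATED, -15)
    return compressor.compress(encoded) + compressor.flush()


def inflate_dataset(compressed: bytes) -> bytes:
    """Reverse deflate_dataset(), recovering the encoded data set."""
    return zlib.decompress(compressed, -15)


# Repetitive stand-in for an encoded data set: (0008,0060) Modality = "CT"
sample = b"\x08\x00\x60\x00CS\x02\x00CT" * 200
packed = deflate_dataset(sample)
assert inflate_dataset(packed) == sample
print(len(sample), "->", len(packed), "bytes")
```

Real data sets compress far less predictably than this repetitive sample, and already-compressed pixel data gains little from a second pass.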

DICOM uses three different Data Element encoding schemes. With Explicit Value Representation (VR) Data Elements whose VR is not OB, OW, OF, SQ, UT, or UN, the format of each Data Element is: GROUP (2 bytes), ELEMENT (2 bytes), VR (2 bytes), length in bytes (2 bytes), Data (variable length). For explicit VR elements with one of those six VRs, the VR is followed by 2 reserved bytes and a 4-byte length; with Implicit VR, the VR is omitted and the tag is followed directly by a 4-byte length. For full details, see section 7.1 of Part 5 of the DICOM Standard.
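The three layouts can be made concrete with a small Python sketch that reads one data element from a byte buffer. It assumes little-endian byte order (as in the Explicit and Implicit VR Little Endian transfer syntaxes) and handles the short explicit form, the long explicit form (2 reserved bytes, then a 4-byte length), and the implicit form (tag followed by a 4-byte length, VR implied by the data dictionary). Undefined lengths (0xFFFFFFFF), which occur with sequences and encapsulated pixel data, are rejected rather than parsed. `read_element` and `DataElement` are hypothetical names.

```python
import struct
from typing import NamedTuple, Optional, Tuple

# Explicit-VR types whose header has 2 reserved bytes and a 4-byte length
LONG_FORM_VRS = {"OB", "OW", "OF", "SQ", "UT", "UN"}
UNDEFINED_LENGTH = 0xFFFFFFFF


class DataElement(NamedTuple):
    group: int
    element: int
    vr: Optional[str]  # None for implicit VR (VR comes from the dictionary)
    value: bytes


def read_element(buf: bytes, offset: int = 0,
                 explicit_vr: bool = True) -> Tuple[DataElement, int]:
    """Decode one little-endian data element; return it and the next offset."""
    group, element = struct.unpack_from("<HH", buf, offset)
    offset += 4
    vr: Optional[str] = None
    if explicit_vr:
        vr = buf[offset:offset + 2].decode("ascii")
        offset += 2
        if vr in LONG_FORM_VRS:
            offset += 2  # skip the two reserved bytes
            (length,) = struct.unpack_from("<I", buf, offset)
            offset += 4
        else:
            (length,) = struct.unpack_from("<H", buf, offset)
            offset += 2
    else:
        (length,) = struct.unpack_from("<I", buf, offset)
        offset += 4
    if length == UNDEFINED_LENGTH:
        raise NotImplementedError("undefined-length elements are not handled")
    if offset + length > len(buf):
        raise ValueError("element value runs past the end of the buffer")
    value = buf[offset:offset + length]
    return DataElement(group, element, vr, value), offset + length


# (0010,0010) Patient's Name, VR PN, explicit VR short form
raw = struct.pack("<HH", 0x0010, 0x0010) + b"PN" + struct.pack("<H", 8) + b"DOE^JOHN"
elem, next_offset = read_element(raw)
print(f"({elem.group:04X},{elem.element:04X}) {elem.vr} {elem.value!r}")
# (0010,0010) PN b'DOE^JOHN'
```

Calling `read_element` repeatedly with the returned offset walks a flat data set element by element.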

The same basic format is used for all applications, including network and file usage, but when written to a file, usually a true "header" (containing copies of a few key attributes and details of the application which wrote it) is added.
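In a file following Part 10 of the standard, that header begins with a 128-byte preamble (often all zeros), followed by the four ASCII characters "DICM" and then the File Meta Information elements of group 0002, which are always encoded as Explicit VR Little Endian. A minimal Python check for that signature might look like the sketch below; the function names are hypothetical, and files written without a Part 10 header will simply fail the check.

```python
import struct
from pathlib import Path
from typing import Tuple

PREAMBLE_LENGTH = 128
PREFIX = b"DICM"


def has_part10_header(data: bytes) -> bool:
    """True if data starts with a 128-byte preamble followed by 'DICM'."""
    end = PREAMBLE_LENGTH + len(PREFIX)
    return len(data) >= end and data[PREAMBLE_LENGTH:end] == PREFIX


def first_meta_tag(data: bytes) -> Tuple[int, int]:
    """Return (group, element) of the first File Meta Information element."""
    if not has_part10_header(data):
        raise ValueError("no Part 10 preamble/prefix found")
    # Group 0002 is always little endian, so the tag reads as two uint16s
    return struct.unpack_from("<HH", data, PREAMBLE_LENGTH + len(PREFIX))


def is_part10_file(path: str) -> bool:
    """Read only the first 132 bytes of a file and check the signature."""
    with Path(path).open("rb") as f:
        return has_part10_header(f.read(PREAMBLE_LENGTH + len(PREFIX)))


# Synthetic header: zero preamble, prefix, then the (0002,0000) tag
header = bytes(128) + b"DICM" + struct.pack("<HH", 0x0002, 0x0000)
print(has_part10_header(header), first_meta_tag(header))  # True (2, 0)
```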

DICOM value representations

Extracted from Section 6.2 of PS 3.5: Data Structure and Encoding.

| Value Representation | Description |
|---|---|
| AE | Application Entity |
| AS | Age String |
| AT | Attribute Tag |
| CS | Code String |
| DA | Date |
| DS | Decimal String |
| DT | Date/Time |
| FL | Floating Point Single (4 bytes) |
| FD | Floating Point Double (8 bytes) |
| IS | Integer String |
| LO | Long String |
| LT | Long Text |
| OB | Other Byte |
| OF | Other Float |
| OW | Other Word |
| PN | Person Name |
| SH | Short String |
| SL | Signed Long |
| SQ | Sequence of Items |
| SS | Signed Short |
| ST | Short Text |
| TM | Time |
| UI | Unique Identifier |
| UL | Unsigned Long |
| UN | Unknown |
| US | Unsigned Short |
| UT | Unlimited Text |
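Most of the VRs in the table are character strings, but AT, FL, FD, SL, SS, UL, and US are fixed-width binary values, so the value multiplicity is simply the value length divided by the width. The sketch below decodes those VRs with Python's `struct` module, assuming a little-endian transfer syntax; `decode_binary` is a hypothetical helper, and OB, OW, and OF are left out because they are usually handled as bulk data.

```python
import struct
from typing import List

# struct format codes for fixed-width binary VRs (little endian assumed)
BINARY_VR_FORMATS = {
    "US": "H",   # unsigned 16-bit
    "SS": "h",   # signed 16-bit
    "UL": "I",   # unsigned 32-bit
    "SL": "i",   # signed 32-bit
    "FL": "f",   # IEEE 754 single, 4 bytes
    "FD": "d",   # IEEE 754 double, 8 bytes
    "AT": "HH",  # attribute tag: (group, element) pair of uint16
}


def decode_binary(vr: str, raw: bytes) -> List[object]:
    """Decode a little-endian binary value into a list (one entry per value)."""
    fmt = "<" + BINARY_VR_FORMATS[vr]
    if len(raw) % struct.calcsize(fmt):
        raise ValueError(f"{len(raw)} bytes is not a whole number of {vr} values")
    values = struct.iter_unpack(fmt, raw)
    # AT keeps (group, element) tuples; the other VRs unwrap to scalars
    return [v if vr == "AT" else v[0] for v in values]


# Two US values (VM 2) and an AT value pointing at Pixel Data (7FE0,0010)
print(decode_binary("US", struct.pack("<2H", 512, 512)))         # [512, 512]
print(decode_binary("AT", struct.pack("<HH", 0x7FE0, 0x0010)))   # [(32736, 16)]
```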

DICOM services

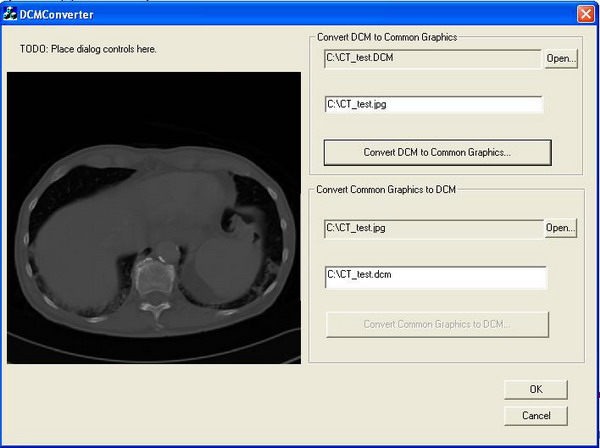

DICOM consists of many different services, most of which involve transmission of data over a network. The file format described below was a later and relatively minor addition to the standard.

Store

The DICOM Store service is used to send images or other persistent objects (structured reports, etc.) to a PACS or workstation.

Storage commitment

The DICOM storage commitment service is used to confirm that an image has been permanently stored by a device (either on redundant disks or on backup media, e.g. burnt to a CD). A Service Class User (SCU, similar to a client) such as a modality or workstation uses the confirmation from the Service Class Provider (SCP, similar to a server), for instance an archive station, to make sure that it is safe to delete the images locally.

Query/Retrieve

This enables a workstation to find lists of images or other such objects and then retrieve them from a PACS.

Modality worklist

This enables a piece of imaging equipment (a modality) to obtain details of patients and scheduled examinations electronically, avoiding the need to type such information multiple times (and the mistakes caused by retyping).

Modality performed procedure step

A complementary service to Modality Worklist, this enables the modality to send a report about a performed examination, including data about the images acquired, the beginning time, end time, and duration of a study, the dose delivered, etc. It helps give the radiology department a more precise handle on resource (acquisition station) use. Also known as MPPS, this service allows a modality to better coordinate with image storage servers by giving the server a list of objects to send before or while actually sending such objects.

Printing

The DICOM Printing service is used to send images to a DICOM printer, normally to print an X-ray film. A standard calibration, the Grayscale Standard Display Function defined in DICOM Part 14, helps ensure consistency between various display devices, including hard-copy printout.

Off-line media (DICOM files)

The off-line media files correspond to Part 10 of the DICOM standard, which describes how to store medical imaging information on removable media. In addition to the data set containing, for example, an image and demographic data, each file must also include the File Meta Information.

DICOM restricts the filenames on DICOM media to 8 characters; some systems wrongly use 8.3 names, but these do not conform to the standard. This is a historical requirement to maintain compatibility with older existing systems, and it is a common source of problems with media created by developers who did not read the specifications carefully. No information may be extracted from these names (PS3.10 Section 6.2.3.2). DICOM also mandates the presence of a media directory, the DICOMDIR file, which provides index and summary information for all the DICOM files on the media. Because the DICOMDIR carries substantially more information about each file than any filename could, there is little need for meaningful file names.
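A media-creation tool can guard against these filename mistakes by validating each file path before writing it. The Python sketch below assumes the File ID rule as it is commonly summarized from PS3.10: at most eight path components, each one to eight characters drawn from uppercase A-Z, digits 0-9, and underscore, with no extension. `is_valid_file_id` is a hypothetical helper, and the exact limits should be checked against the current edition of PS3.10.

```python
import re

# One File ID component: 1-8 characters from uppercase letters, digits, "_"
COMPONENT = re.compile(r"[A-Z0-9_]{1,8}")
MAX_COMPONENTS = 8  # assumed maximum number of path components


def is_valid_file_id(path: str) -> bool:
    """Check a '/'-separated media path against the assumed File ID rules."""
    components = path.split("/")
    if len(components) > MAX_COMPONENTS:
        return False
    return all(COMPONENT.fullmatch(part) for part in components)


for name in ["DICOMDIR", "DICOM/ST000001/IM000001", "IMAGE1.DCM", "im0001"]:
    print(name, is_valid_file_id(name))
```

The 8.3 name `IMAGE1.DCM` and the lowercase `im0001` are both rejected, while the extensionless uppercase names pass.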

DICOM files that are not part of DICOM media typically have a .dcm file extension; files on DICOM media must have no extension.

The MIME type for DICOM files is defined by RFC 3240 as application/dicom.

The Uniform Type Identifier type for DICOM files is org.nema.dicom.

There is also an ongoing media exchange test and "connectathon" process for CD media and network operation, organized by IHE. MicroDicom is free Windows software for reading DICOM data.

Application areas

| Modality | Description |
|---|---|
| AS | Angioscopy (retired) |
| BI | Biomagnetic Imaging |
| CD | Color Flow Doppler (retired 2008) |
| CF | Cinefluorography (retired) |
| CP | Colposcopy (retired) |
| CR | Computed Radiography |
| CS | Cystoscopy (retired) |
| CT | Computed Tomography |
| DD | Duplex Doppler (retired 2008) |
| DG | Diaphanography |
| DM | Digital Microscopy (retired) |
| DS | Digital Subtraction Angiography (retired) |
| DX | Digital Radiography |
| EC | Echocardiography (retired) |
| ECG | Electrocardiograms |
| EM | Electron Microscope |
| ES | Endoscopy |
| FA | Fluorescein Angiography (retired) |
| FS | Fundoscopy (retired) |
| GM | General Microscopy |
| HC | Hard Copy |
| LP | Laparoscopy (retired) |
| LS | Laser Surface Scan |
| MA | Magnetic Resonance Angiography (retired) |
| MG | Mammography |
| MR | Magnetic Resonance |
| MS | Magnetic Resonance Spectroscopy (retired) |
| NM | Nuclear Medicine |
| OP | Ophthalmic Photography |
| OPM | Ophthalmic Mapping |
| OPR | Ophthalmic Refraction |
| OPV | Ophthalmic Visual Field |
| OT | Other |
| PT | Positron Emission Tomography (PET) |
| RD | Radiotherapy Dose (a.k.a. RTDOSE) |
| RF | Radio Fluoroscopy |
| RG | Radiographic Imaging (conventional film screen) |
| RTIMAGE | Radiotherapy Image |
| RP | Radiotherapy Plan (a.k.a. RTPLAN) |
| RS | Radiotherapy Structure Set (a.k.a. RTSTRUCT) |
| RT | Radiation Therapy |
| SC | Secondary Capture |
| SM | Slide Microscopy |
| SR | Structured Reporting |
| ST | Single-Photon Emission Computed Tomography (retired 2008) |
| TG | Thermography |
| US | Ultrasound |
| VF | Videofluorography (retired) |
| VL | Visible Light |
| XA | X-Ray Angiography |
| XC | External Camera (Photography) |

DICOM transmission protocol port numbers over IP

The following TCP and UDP port numbers are registered for DICOM with the Internet Assigned Numbers Authority (IANA):

- 104: well-known port for DICOM over TCP or UDP. Because 104 lies in the well-known (system) range below 1024, many operating systems require special privileges to use it.
- 2761: registered port for DICOM using the Integrated Secure Communication Layer (ISCL) over TCP or UDP
- 2762: registered port for DICOM using Transport Layer Security (TLS) over TCP or UDP
- 11112: registered port for DICOM using standard, open communication over TCP or UDP
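Whichever port is used, DICOM network traffic over TCP is carried in Protocol Data Units (PDUs) defined in Part 8 of the standard. Each PDU starts with a 6-byte header: a 1-byte PDU type, a reserved byte, and a 4-byte length of the remainder of the PDU, encoded big-endian (unlike the little-endian data sets typically carried inside them). The Python sketch below builds the simplest PDU, an A-RELEASE-RQ, and reads PDUs from a byte stream; it does not open a connection or negotiate an association, and the helper names are hypothetical.

```python
import io
import struct
from typing import BinaryIO, Tuple

PDU_TYPES = {
    0x01: "A-ASSOCIATE-RQ",
    0x02: "A-ASSOCIATE-AC",
    0x03: "A-ASSOCIATE-RJ",
    0x04: "P-DATA-TF",
    0x05: "A-RELEASE-RQ",
    0x06: "A-RELEASE-RP",
    0x07: "A-ABORT",
}
PDU_HEADER = struct.Struct(">BBI")  # type, reserved, length (big endian)


def build_release_rq() -> bytes:
    """A-RELEASE-RQ: header plus 4 reserved bytes, so the length field is 4."""
    return PDU_HEADER.pack(0x05, 0x00, 4) + bytes(4)


def read_pdu(stream: BinaryIO) -> Tuple[str, bytes]:
    """Read one PDU from a byte stream; return its type name and body."""
    header = stream.read(PDU_HEADER.size)
    if len(header) < PDU_HEADER.size:
        raise EOFError("stream ended before a full PDU header arrived")
    pdu_type, _reserved, length = PDU_HEADER.unpack(header)
    body = stream.read(length)
    if len(body) < length:
        raise EOFError("stream ended in the middle of a PDU")
    name = PDU_TYPES.get(pdu_type, f"unknown (0x{pdu_type:02X})")
    return name, body


pdu = build_release_rq()
print(len(pdu), read_pdu(io.BytesIO(pdu)))  # 10 ('A-RELEASE-RQ', b'\x00\x00\x00\x00')
```

On a live connection, a buffered stream such as the one returned by `socket.makefile("rb")` could take the place of `io.BytesIO`.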